Zoom AI, machine learning, deep learning

Zoom session 1, part 2

Topic: Introduction to general concepts of machine learning.

Slides

A few notes from the slides

Artificial intelligence and machine learning

Artificial intelligence is a vast field: any system mimicking animal intelligence falls within its scope.

Machine learning (ML) is a subfield of artificial intelligence that can be defined as the study of computer programs whose performance at a task improves with experience.

Since this experience comes in the form of data, ML consists of feeding large amounts of data to algorithms, which adjust their internal parameters in response (loosely speaking, strengthening some pathways and weakening others).

Example in image recognition

Coding all the possible ways—pixel by pixel—that a picture can represent a certain object is an impossibly large task. By feeding examples of images of that object to a neural network however, we can train it to recognize that object in images that it has never seen (without explicitly programming how it does this!).

Neural networks

Neurons

Artificial neural networks (ANNs) are one family of machine learning models (others include decision trees and Bayesian networks). Their potential and popularity have exploded in recent years, and they are what we will focus on in this course. In the next lesson, you will watch an excellent video by 3Blue1Brown that will walk you through the concepts of neural networks. In short, artificial neural networks are a series of layered units mimicking the concept of biological neurons: inputs are received by every unit of a layer, processed, then transmitted to the units of the next layer. In the process of learning, experience strengthens some connections between units and weakens others.

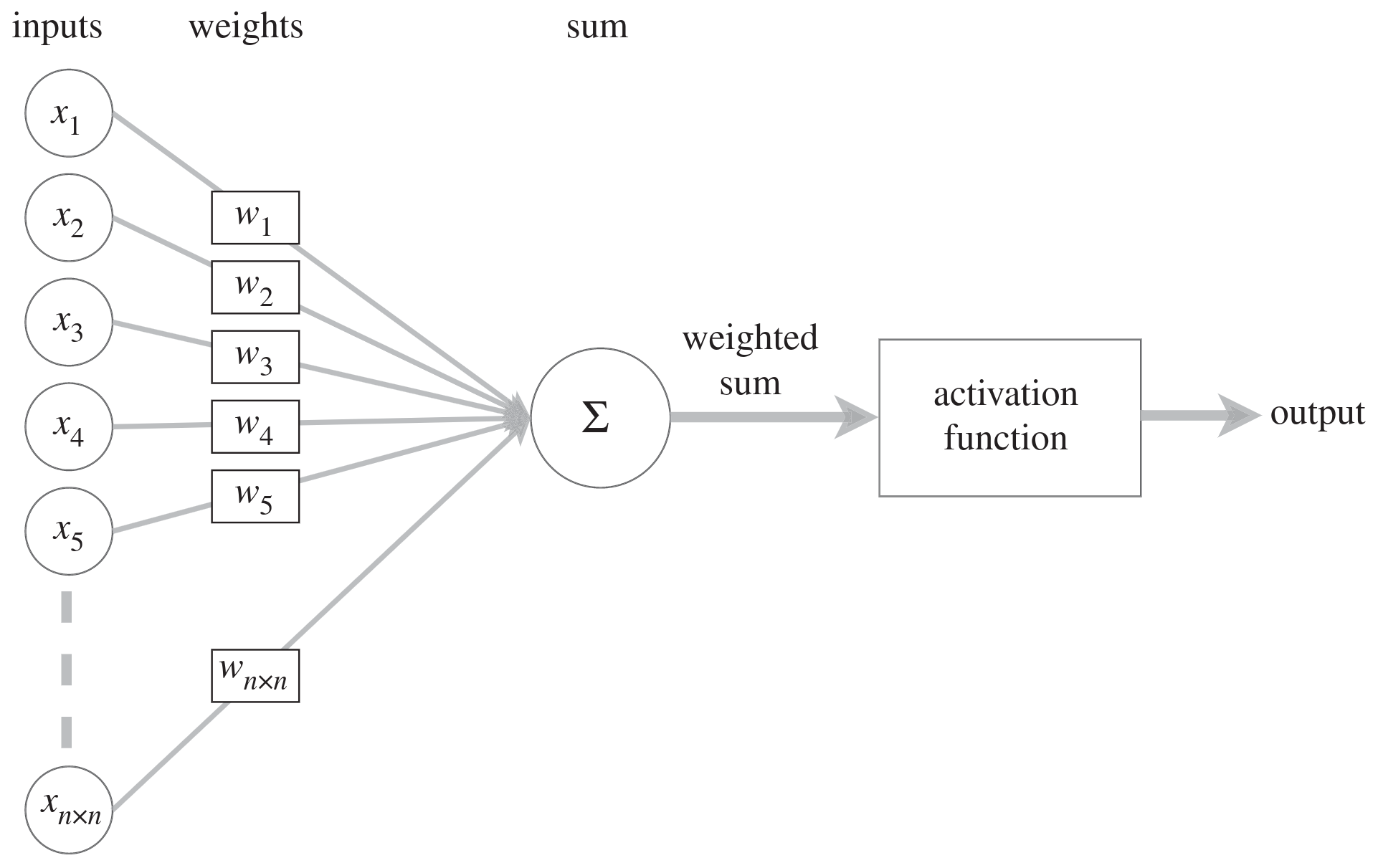

In biological networks, the information consists of action potentials (rapid depolarizations of the neuron membrane) propagating through the network. In artificial ones, the information consists of tensors (multidimensional arrays): each unit computes a weighted sum of its input tensor, adds a (possibly weighted) bias, and passes the result through an activation function before passing the output tensor on to the next layer of units. The weights and biases are the parameters that get adjusted during learning.

Note:

The bias makes it possible to shift the activation function to the right or to the left (i.e. it creates an offset).
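To make the computation of a single unit concrete, here is a minimal sketch in plain Python. The function names (`sigmoid`, `neuron`) and all the numbers are made up for illustration and do not come from the slides; the sigmoid is just one possible choice of activation function.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial unit: activation(weighted sum of inputs + bias)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical inputs and weights, chosen only for illustration.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.2]

# Same inputs and weights, two different biases:
# the bias offsets the value fed to the activation function.
out_no_bias = neuron(x, w, bias=0.0)
out_with_bias = neuron(x, w, bias=1.0)

print(out_no_bias, out_with_bias)
```

With these values the weighted sum is -0.5, so a bias of 1.0 moves the input of the sigmoid from -0.5 to 0.5 and the output rises accordingly.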

Schematic of a biological neuron:

Schematic of an artificial neuron:

Activation

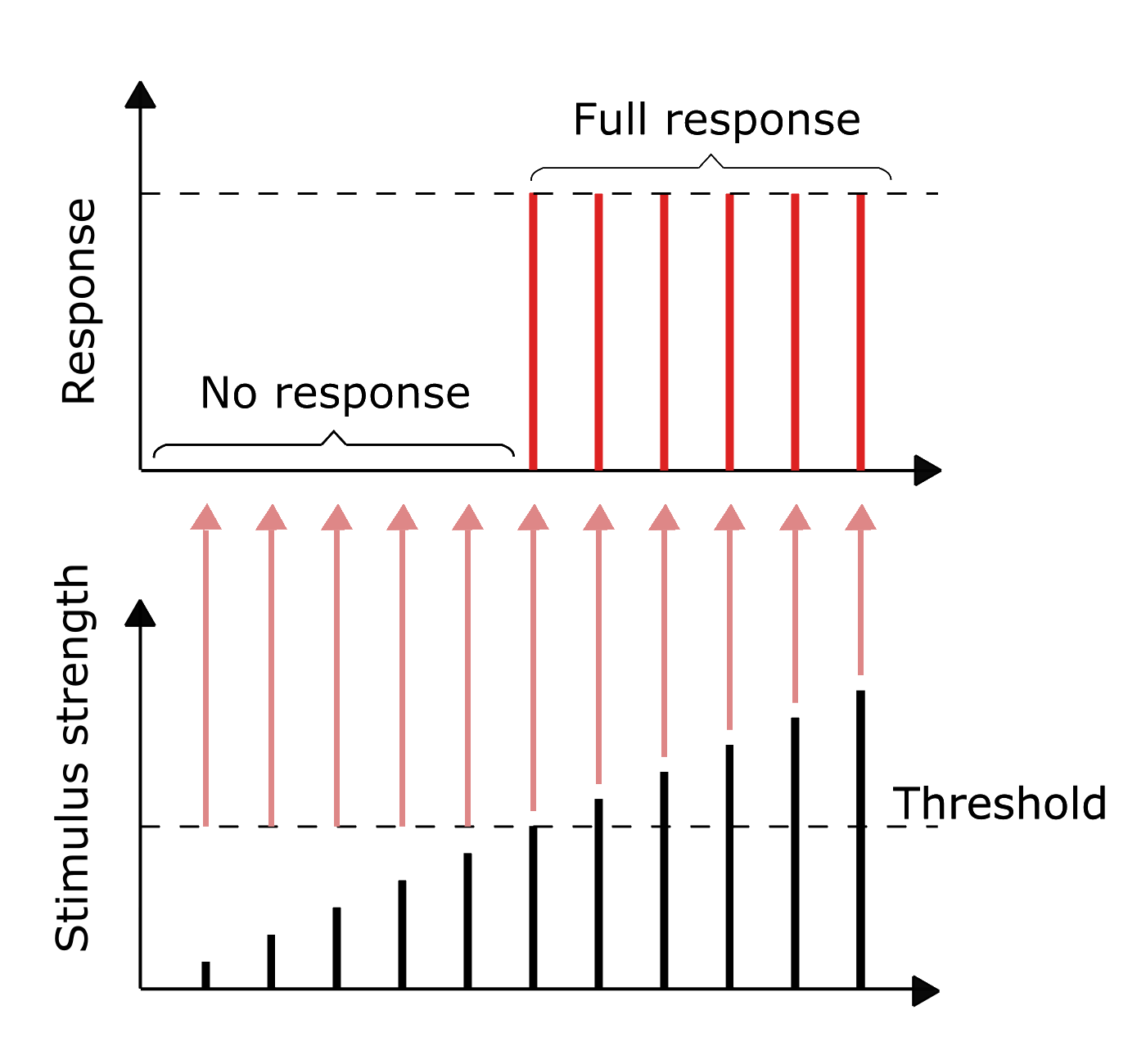

The information in biological neurons is an all-or-nothing electrochemical pulse or action potential. Greater stimuli don’t produce stronger signals but increase firing frequency. In contrast, artificial neurons pass the computation of their inputs through an activation function and the output can take any of the values possible with that function.

Threshold potential in biological neurons:

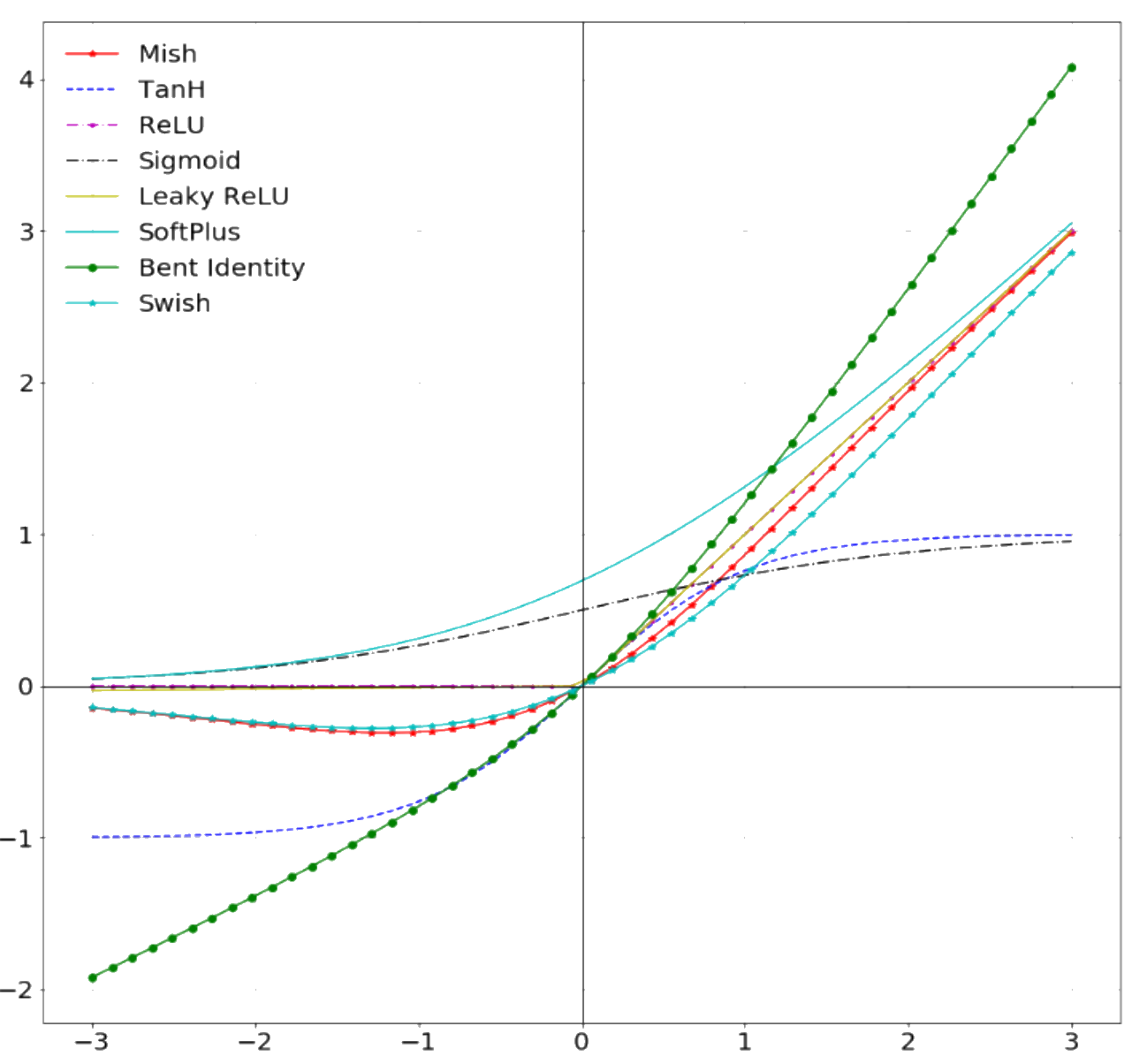

Some of the most common activation functions in artificial neurons:

Which activation function to use depends on the type of problem and the available computing budget. Some early functions have fallen out of use while new ones have emerged (e.g. sigmoid has largely been replaced by ReLU, which makes networks easier to train).
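As a rough illustration, three widely used activation functions can be written in a few lines of plain Python. Sigmoid and ReLU are the two named above; tanh is added here as another common example and is not taken from the slides.

```python
import math

def sigmoid(z):
    """Smooth S-shaped curve with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Similar shape to sigmoid, but with outputs in (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Rectified linear unit: 0 for negative inputs, identity otherwise."""
    return max(0.0, z)

# Compare the three functions on a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```

Note how ReLU involves only a comparison, whereas sigmoid and tanh require evaluating an exponential.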

Networks

While biological neurons are connected in extremely intricate patterns, artificial ones follow a layered structure. Another difference in complexity is in the number of units: the human brain has 65–90 billion neurons, while ANNs have far fewer units.

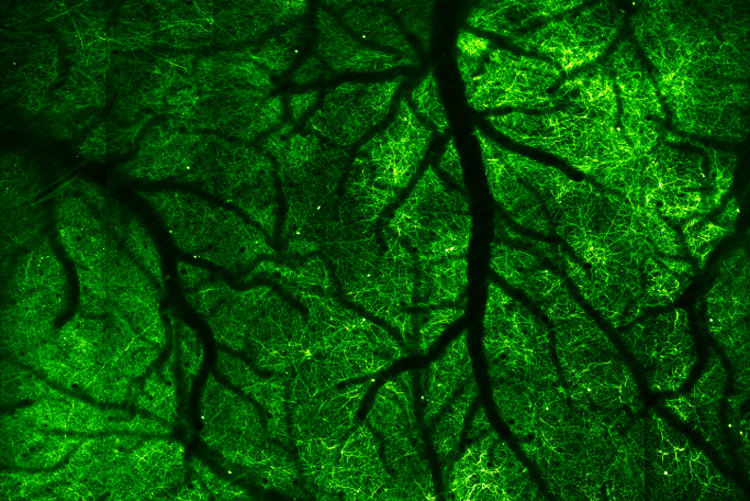

Neurons in mouse cortex:

Image by Na Ji, UC Berkeley

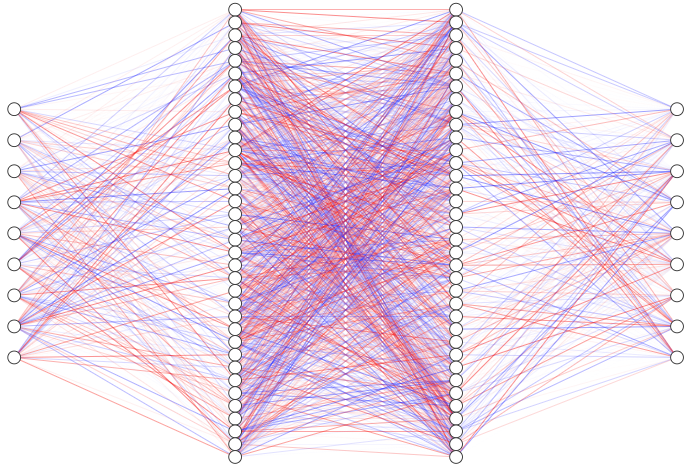

Neural network with 2 hidden layers:

Learning

The process of learning in biological NN happens through neuron death or growth and through the creation or loss of synaptic connections between neurons. In ANNs, learning happens through optimization algorithms such as gradient descent, which minimize a loss function (for instance cross-entropy) by adjusting the weights and biases of each layer of neurons over many iterations. Cross-entropy measures how far the predicted distribution is from the real one.
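The idea can be sketched on the smallest possible case: a single sigmoid unit trained by gradient descent on a binary cross-entropy loss. The toy dataset, the learning rate and the number of iterations below are all made up for illustration; real networks have many layers and rely on a deep learning library to compute the gradients.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy between labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from 0 and 1 to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)

# Toy, made-up dataset: one input feature, label 1 when the feature is large.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0, 0, 1, 1]

w, b = 0.0, 0.0        # the weight and bias to learn
learning_rate = 0.5    # hypothetical step size

def predict(w, b):
    return [sigmoid(w * x + b) for x in xs]

initial_loss = cross_entropy(ys, predict(w, b))

for step in range(1000):
    preds = predict(w, b)
    # Gradients of the mean cross-entropy with respect to w and b
    # (for a sigmoid unit they reduce to averages of prediction errors).
    grad_w = sum((p - y) * x for p, y, x in zip(preds, ys, xs)) / len(xs)
    grad_b = sum(p - y for p, y in zip(preds, ys)) / len(xs)
    # Move each parameter a small step against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = cross_entropy(ys, predict(w, b))
print(f"loss before: {initial_loss:.3f}, loss after: {final_loss:.3f}")
```

Each iteration nudges the weight and the bias in the direction that lowers the loss, which is the "adjusting over many iterations" described above.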

We talk about deep learning whenever a neural network has two or more hidden layers.
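As a small illustration of such a layered structure, the sketch below passes one input through a network with two hidden layers. The layer sizes, the random initial weights and the choice of ReLU for hidden layers and sigmoid for the output are assumptions made for this example, not something prescribed by the slides, and no training takes place here.

```python
import math
import random

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: every unit receives every input."""
    return [
        activation(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

def random_layer(n_in, n_out, rng):
    """Hypothetical initialisation: random weights in [-1, 1], zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
sizes = [3, 4, 4, 2]  # input layer, hidden layer 1, hidden layer 2, output layer
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

def forward(x):
    """Feed x through every layer in turn; the last layer uses sigmoid."""
    activations = x
    for i, (weights, biases) in enumerate(layers):
        is_output = i == len(layers) - 1
        activations = dense(activations, weights, biases, sigmoid if is_output else relu)
    return activations

output = forward([0.2, -0.7, 1.5])
print(output)
```

The output of each layer becomes the input of the next one, so adding depth only means adding entries to `sizes`.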