Zoom The MNIST

Zoom session 2 — part 3

Topic: In this session, we will explore the MNIST dataset with PyTorch.

The MNIST dataset

The MNIST is a classic dataset commonly used for testing machine learning systems. It consists of pairs of images of handwritten digits and their corresponding labels.

Each image is 28x28 pixels, and each pixel is a greyscale value ranging from 0 to 255. Each label is the digit (0 to 9) that the image represents.

There are 60,000 training pairs and 10,000 testing pairs.

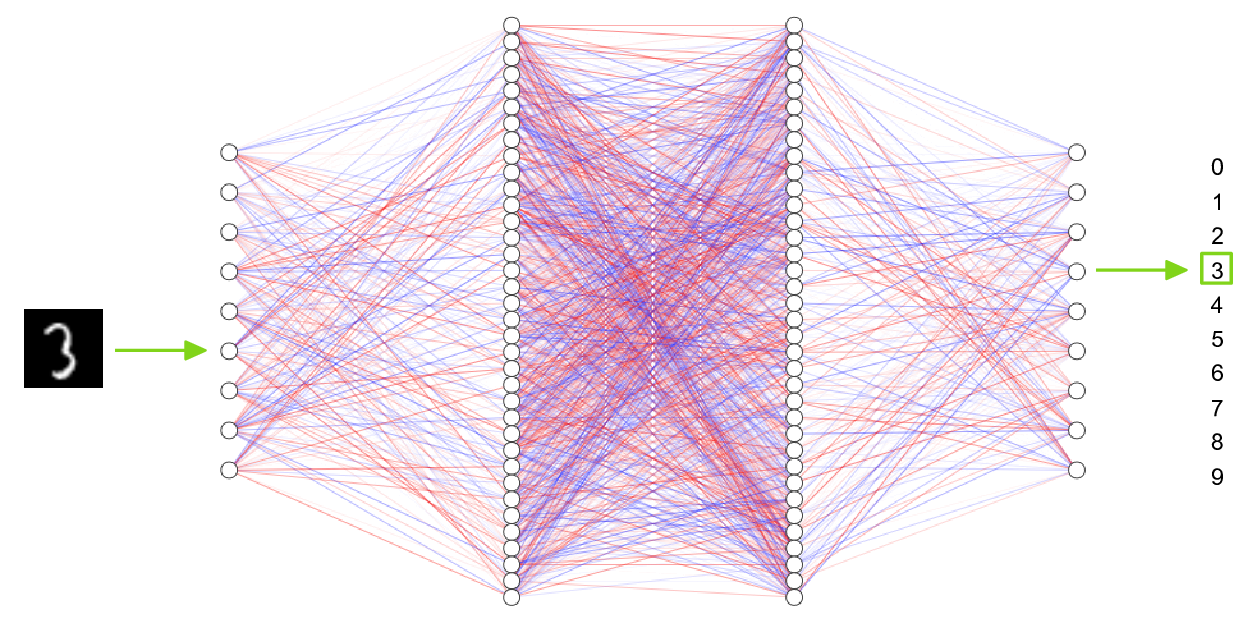

The goal is to build a neural network which can learn from the training set to properly identify the handwritten digits and which will perform well when presented with the testing set that it has never seen. This is a typical case of supervised learning.

Now, let's explore the MNIST with PyTorch.

Downloading and preparing the MNIST data

Where to store the data in the cluster

In Compute Canada clusters, a good place to store data shared amongst members of a project is in the /project file system.

Your group's shared space is usually /project/def-<group>, where <group> is the name of your PI. You can access it from your home directory through the symbolic link ~/projects/def-<group>.

In our training cluster, we are all part of the group def-sponsor00, accessible through ~/projects/def-sponsor00.

It would make little sense for each of us to download the same MNIST data to a different place.

We will thus all access the MNIST data in ~/projects/def-sponsor00/data.

How to obtain the data?

The dataset can be downloaded directly from the MNIST website, but the PyTorch package TorchVision has tools to download and transform several classic vision datasets, including the MNIST.

Transforming the data

We will transform the raw data to tensors and normalize it using the mean and standard deviation of the MNIST training data (0.1307 and 0.3081 respectively).
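To make the transformation concrete, here is the arithmetic it applies to a single pixel, written in plain Python as a sketch (normalize_pixel is a hypothetical helper, not part of TorchVision): ToTensor() first rescales a raw 0-255 value to the range 0.0-1.0, then Normalize() subtracts the mean and divides by the standard deviation.

```python
# Per-pixel arithmetic performed by ToTensor() followed by Normalize().
# MEAN and STD are the MNIST training-set statistics quoted above.
MEAN = 0.1307
STD = 0.3081


def normalize_pixel(raw: int) -> float:
    """Map a raw 0-255 greyscale value to its normalized value (hypothetical helper)."""
    scaled = raw / 255              # ToTensor(): [0, 255] -> [0.0, 1.0]
    return (scaled - MEAN) / STD    # Normalize(): subtract the mean, divide by the std


for raw in (0, 128, 255):
    print(raw, round(normalize_pixel(raw), 4))
```

So after the transformation, pixel values no longer lie between 0 and 255 but roughly between -0.42 (raw 0) and 2.82 (raw 255).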

First, let's load the needed libraries:

import torch
from torchvision import datasets, transforms
from matplotlib import pyplot as plt

Then, let's define a transformation:

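transform = transforms.Compose([
    transforms.ToTensor(),                       # image with values 0-255 -> float tensor with values 0.0-1.0
    transforms.Normalize((0.1307,), (0.3081,))   # (x - mean) / std, one value per channel
])

Downloading the data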

We can now download and transform the data.

Training data

train_data = datasets.MNIST(
    '~/projects/def-sponsor00/data',
    train=True, download=True, transform=transform)

train=True selects the training set of the MNIST.

Test data

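Even though the mean and standard deviation of the test data are slightly different, it is important to normalize the test data in the same way, with the training-set values: the network learns from inputs on that scale, so the test inputs must be put on the same scale.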
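To illustrate what "the same way" means, here is a sketch on synthetic data (the random tensors and the helper names pixel_stats and normalize are hypothetical): the statistics are computed once from the training images and then applied unchanged to the test images. It also shows how values like 0.1307 and 0.3081 can be obtained.

```python
from typing import Tuple

import torch


def pixel_stats(images: torch.Tensor) -> Tuple[float, float]:
    """Mean and std over all pixels of a uint8 image tensor, after scaling to [0, 1]."""
    scaled = images.float() / 255
    return scaled.mean().item(), scaled.std().item()


def normalize(images: torch.Tensor, mean: float, std: float) -> torch.Tensor:
    """Scale uint8 images to [0, 1], then standardize them with the given statistics."""
    return (images.float() / 255 - mean) / std


# Synthetic stand-ins for the raw training and test images (N x 28 x 28, uint8).
torch.manual_seed(0)
train_images = torch.randint(0, 256, (100, 28, 28), dtype=torch.uint8)
test_images = torch.randint(0, 256, (20, 28, 28), dtype=torch.uint8)

mean, std = pixel_stats(train_images)          # statistics come from the training set only
train_norm = normalize(train_images, mean, std)
test_norm = normalize(test_images, mean, std)  # the test set reuses the training statistics

print(round(train_norm.mean().item(), 4), round(train_norm.std().item(), 4))
```

The normalized training data ends up with mean close to 0 and standard deviation close to 1, while the test data is merely shifted and scaled by the same amounts. With the real dataset, train_data.data holds the raw uint8 training images (60,000 x 28 x 28), so pixel_stats(train_data.data) should come out close to 0.1307 and 0.3081; we have not run that here.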

test_data = datasets.MNIST(
    '~/projects/def-sponsor00/data',
    train=False, transform=transform)

train=False selects the test set.

I have already run these commands, so the data is already in ~/projects/def-sponsor00/data, ready to be used, and you don't need to download anything.

Exploring the data

Data inspection

First, let's check the size of train_data:

print(len(train_data))

OK, that makes sense since the MNIST training set has 60,000 pairs. Since each element of train_data is a pair, we should expect it to have a length of 2. Let's double-check with the first element:

print(len(train_data[0]))

OK. So far, so good. We can print that first pair:

print(train_data[0])

And you can see that it is a tuple with:

print(type(train_data[0]))

What is that tuple made of?

print(type(train_data[0][0]))

print(type(train_data[0][1]))

It is made of the tensor for the first image (remember that we transformed the images into tensors when we created the objects train_data and test_data) and the integer of the first label (which you can see is 5 when you print train_data[0][1]).
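train_data behaves like any PyTorch Dataset: len() and indexing are backed by its __len__ and __getitem__ methods. The class below is a minimal sketch that mimics the structure explored above without touching the real data; FakeDigits is a hypothetical name, and its random pixels and labels merely stand in for real digits.

```python
import torch
from torch.utils.data import Dataset


class FakeDigits(Dataset):
    """Hypothetical stand-in for datasets.MNIST: random 1x28x28 images with int labels."""

    def __init__(self, n: int = 5):
        g = torch.Generator().manual_seed(0)
        self.images = torch.rand(n, 1, 28, 28, generator=g)
        self.labels = torch.randint(0, 10, (n,), generator=g).tolist()  # plain Python ints

    def __len__(self) -> int:
        return len(self.images)  # what len(dataset) calls

    def __getitem__(self, idx: int):
        return self.images[idx], self.labels[idx]  # what dataset[idx] returns: a tuple


ds = FakeDigits()
print(len(ds))                          # number of (image, label) pairs
print(type(ds[0]))                      # <class 'tuple'>
print(type(ds[0][0]), type(ds[0][1]))   # a tensor and an int
```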

So since train_data[0][0] is the tensor representing the image of the first pair, let's check its size:

print(train_data[0][0].size())

That makes sense: a color image would have 3 layers of RGB values (so the size in the first dimension would be 3), but because the MNIST images are greyscale, there is a single layer of values (the grey level of each pixel), so the first dimension has a size of 1. The 2nd and 3rd dimensions correspond to the height and width of the image in pixels, hence 28 and 28.

Run the following:

print(train_data[0][0][0])
print(train_data[0][0][0][0])
print(train_data[0][0][0][0][0])

And think about what each of them represents.

Then explore the test_data object.

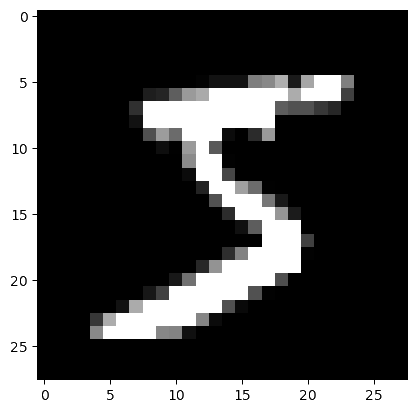

Plotting an image from the data

For this, we will use pyplot from matplotlib.

First, we select the image of the first pair and reduce it from 3 to 2 dimensions by removing its dimension of size 1 with torch.squeeze:

img = torch.squeeze(train_data[0][0])
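The effect of torch.squeeze can be checked on a synthetic tensor with the same shape as train_data[0][0] (random values here, not a real digit). The sketch also shows two alternatives that give the same 28 x 28 result in this case:

```python
import torch

# Synthetic stand-in for train_data[0][0]: one channel, 28 x 28 pixels.
x = torch.rand(1, 28, 28)

img = torch.squeeze(x)        # drops every dimension of size 1
print(x.size(), img.size())   # torch.Size([1, 28, 28]) torch.Size([28, 28])

img_dim0 = x.squeeze(0)       # drops only dimension 0
img_index = x[0]              # selects the single channel
print(torch.equal(img, img_dim0), torch.equal(img, img_index))  # True True
```

torch.squeeze without a dimension argument removes all size-1 dimensions, so squeeze(0) or indexing is the more cautious choice if another dimension (a batch of one image, for instance) could also have size 1.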

Then we plot it with pyplot. Since we are on a cluster, instead of displaying it on screen with plt.show(), we save it to a file:

plt.imshow(img, cmap='gray')
plt.savefig('first_image.png')  # any file name works

This is what that first image looks like:

And indeed, it matches the first label we explored earlier (train_data[0][1]).

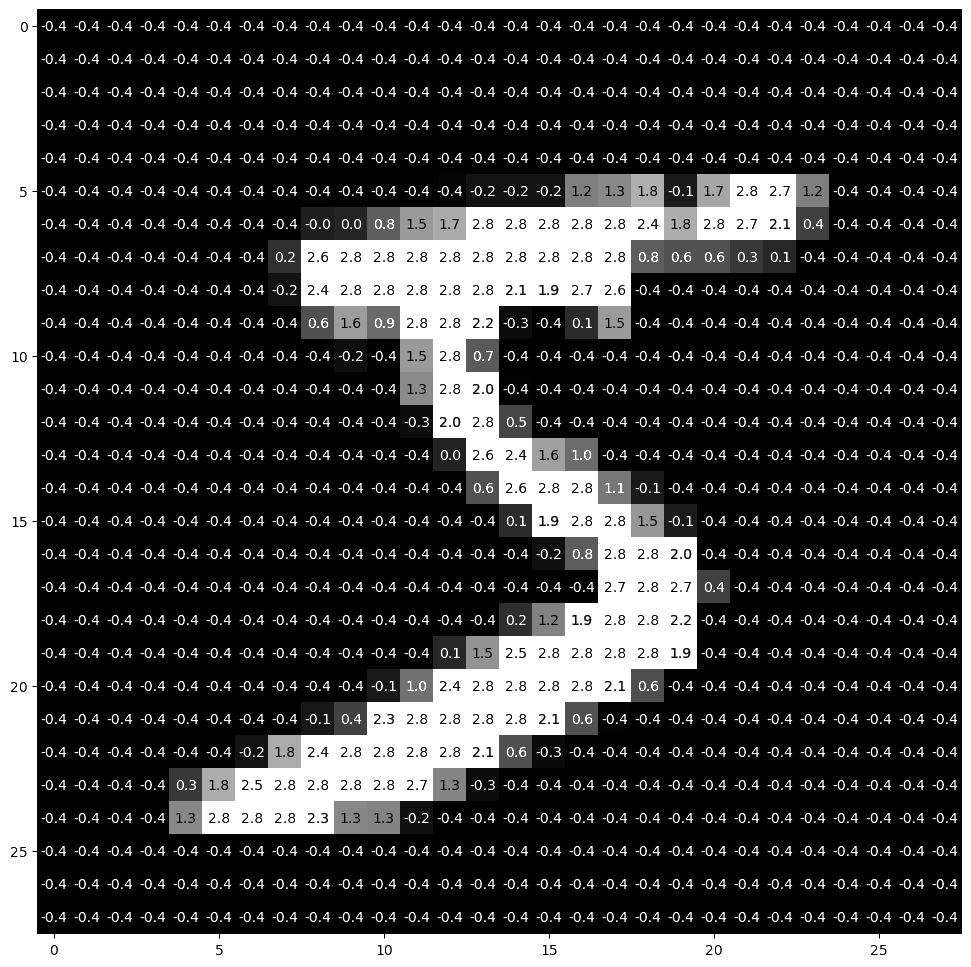
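On a cluster node without a display, one common approach is to select matplotlib's non-interactive Agg backend before importing pyplot, so that figures are only rendered to files. A sketch, assuming a random 28 x 28 tensor in place of the squeezed first image and a hypothetical file name in the system's temporary directory:

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: renders to files, needs no display
from matplotlib import pyplot as plt
import torch

img = torch.rand(28, 28)  # synthetic stand-in for the squeezed first image

fig, ax = plt.subplots()
ax.imshow(img.numpy(), cmap="gray")  # explicit conversion of the CPU tensor to a NumPy array
out_path = os.path.join(tempfile.gettempdir(), "first_image.png")  # hypothetical file name
fig.savefig(out_path)
plt.close(fig)  # release the figure's memory, useful when saving many plots in a script
print(os.path.exists(out_path))
```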

Plotting an image with its pixel values

We can plot it in more detail by showing the value of each pixel in the image. One small twist: we need to pick a threshold below which the pixel values are printed in white; otherwise they would not be visible (black text on a near-black background). We also round the pixel values to one decimal place so as not to clutter the result.

imgplot = plt.figure(figsize = (12, 12))

sub = imgplot.add_subplot(111)

sub.imshow(img, cmap='gray')

width, height = img.shape

thresh = img.max() / 2.5

for x in range(width):

for y in range(height):

val = round(img[x][y].item(), 1)

sub.annotate(str(val), xy=(y, x),

horizontalalignment='center',

verticalalignment='center',

color='white' if img[x][y].item() < thresh else 'black')And this is what we get:

Batch processing

PyTorch provides the torch.utils.data.DataLoader class which combines a dataset and an optional sampler and provides an iterable (while training or testing our neural network, we will iterate over that object). It allows, among many other things, to set the batch size and shuffle the data.

So our last step in preparing the data is to pass it through DataLoader.

Training data

train_loader = torch.utils.data.DataLoader(

train_data, batch_size=20, shuffle=True)Test data

test_loader = torch.utils.data.DataLoader(

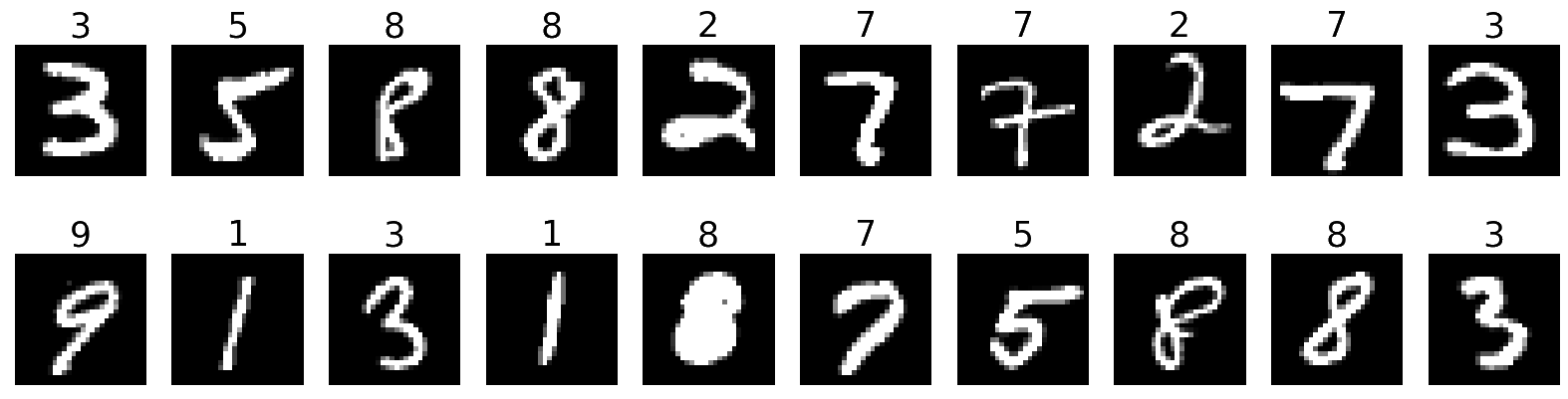

test_data, batch_size=20, shuffle=False)Plot a full batch of images with their labels

Now that we have passed our data through DataLoader, it is easy to select one batch from it. Let's plot an entire batch of images with their labels.
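As a sketch of what a DataLoader hands back, here is the same pattern on a small synthetic TensorDataset used in place of train_data (100 random images, an assumption for illustration). TensorDataset returns its labels as tensors rather than ints, but batching turns MNIST's int labels into a tensor as well, which is why .item() is used on batch labels.

```python
import math

import torch
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in for train_data: 100 images of shape 1 x 28 x 28 with labels 0-9.
images = torch.rand(100, 1, 28, 28)
labels = torch.randint(0, 10, (100,))
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset, batch_size=20, shuffle=True)

batch_images, batch_labels = next(iter(loader))
print(batch_images.size())  # torch.Size([20, 1, 28, 28]): a new leading batch dimension
print(batch_labels.size())  # torch.Size([20])

# Number of batches per pass over the data: ceil(number of samples / batch size).
print(len(loader), math.ceil(len(dataset) / 20))  # 5 5
```

With the real data, the same arithmetic gives 60,000 / 20 = 3,000 batches for train_loader and 10,000 / 20 = 500 batches for test_loader.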

First, we need to get one batch of training images and their labels:

dataiter = iter(train_loader)
batchimg, batchlabel = next(dataiter)  # use next(); recent PyTorch iterators have no .next() method

Then, we can plot them:

batchplot = plt.figure(figsize=(20, 5))

for i in range(20):
    sub = batchplot.add_subplot(2, 10, i + 1, xticks=[], yticks=[])
    sub.imshow(torch.squeeze(batchimg[i]), cmap='gray')
    sub.set_title(str(batchlabel[i].item()), fontsize=25)

batchplot.savefig('batch.png')  # any file name works

We get:

References

This lesson drew heavily on a model by Muhammad Haseeb.